URL classification is the process of collecting the content and other metadata of a given URL and using them to classify the URL according to a specific methodology and set of objectives.

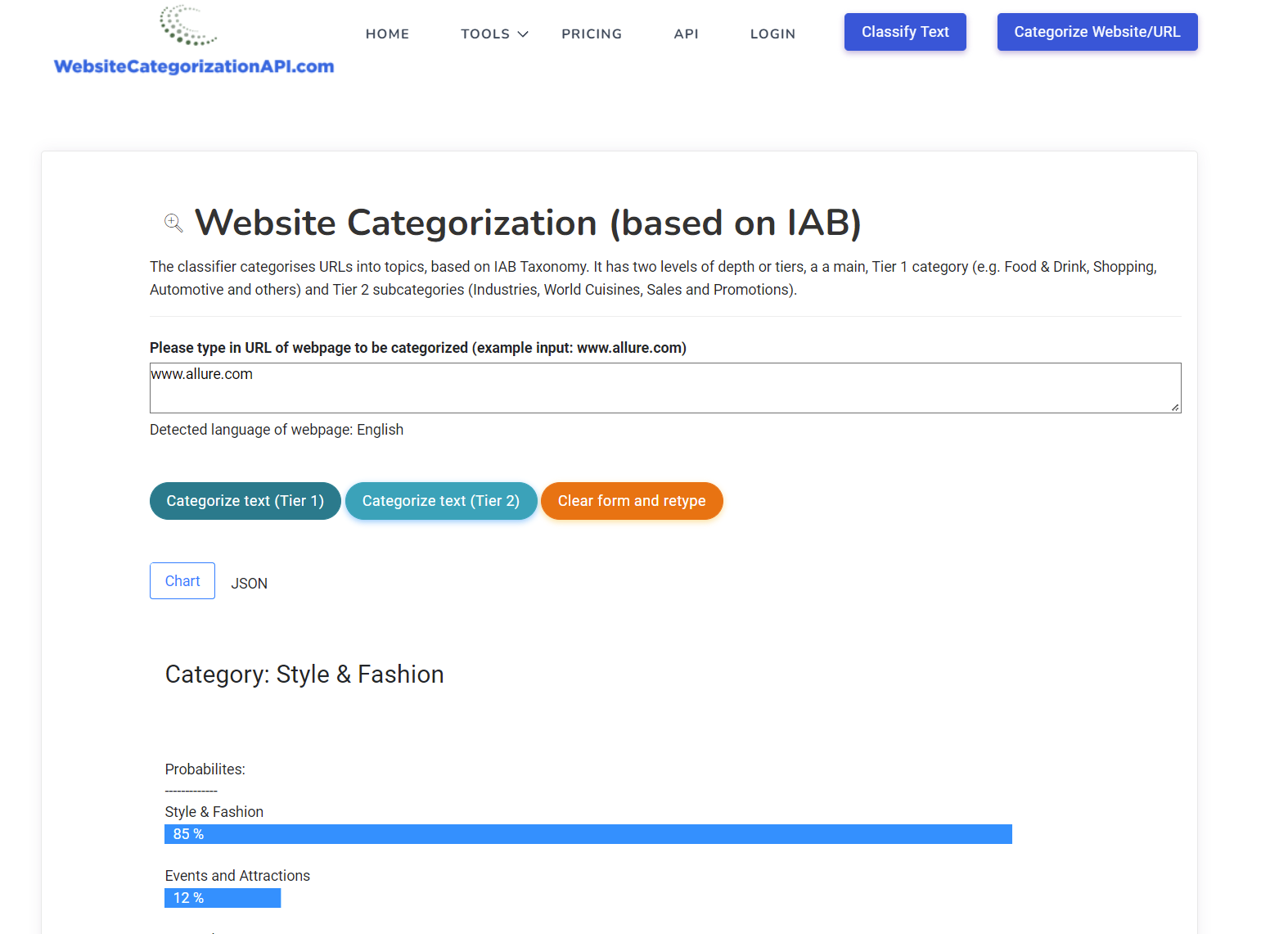

We have developed one of the most accurate URL classification APIs, with websites classified in over 1000 content categories. You can try it out (for free) here:

URL categorization of websites — cybersecurity

An important objective for URL categorization arises in the context of cybersecurity, where we want to protect our systems and sensitive information from digital attacks.

A typical example of such an attack is phishing: creating a counterfeit website that mimics a genuine one in order to obtain critical information from the user.

Phishing attacks use a variety of methods, including link manipulation (making a malicious URL look like a legitimate one), filter evasion (using images of websites), website forgery (injecting JavaScript or other code into a legitimate website) and social engineering.

When building a machine learning model for identifying malicious websites, a given URL can be classified into one of two classes: “malicious/not safe” or “safe”.

There are many other ways to classify URLs. One of them, content categorization, will be discussed in more detail in the second post.

Goals of this article

This is part 1 of our multi-post article that will focus on several different topics:

- we will introduce the steps needed to build a URL categorization service that can categorize websites as malicious or not malicious (safe)

- we will discuss how to build a machine learning model that can perform domain categorization based on domain content/text

- we will provide a free URL database with around 1 million domains classified into 21 content categories (IAB taxonomy), together with an in-depth analysis of the data set

URL classification is usually performed on a large number of websites, so it is almost always automated. With the rise of machine learning and deep learning models over the last decade, this automation is usually handled by a machine learning model.

Training data set for URL categorization ML models

When building a supervised machine learning model, the first step is to obtain the necessary labelled data, i.e. the training data set on which the ML model is built.

In our case, what we need is a list of malicious URLs that have been manually checked and labelled as such.

One great source for this purpose can be found here: https://www.unb.ca/cic/datasets/url-2016.html.

We need two classes of URLs (“safe” and “not safe”). For the safe class, the authors of this data set collected 35,300 URLs from Alexa top sites, which can be considered safe.

For malicious URLs they selected the following types:

- Spam URLs from the WEBSPAM-UK2007 collection.

- Phishing URLs collected from the OpenPhish website.

- Malware URLs obtained from the DNS-BH project, which maintains a list of malware URLs.

- Finally, defacement URLs were Alexa-ranked trusted websites that were hosting fraudulent or hidden URLs with malicious web pages.

In addition to this, we also collected malicious URLs by using the free API from https://www.phishtank.com.
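Once the safe and malicious URL lists have been downloaded, they need to be merged into a single labelled data set. The following is a minimal sketch of that step, assuming the URLs are already available as two Python lists. The function name `build_labelled_dataset`, the 0/1 label encoding and the 80/20 split are illustrative assumptions, not part of the original data set.

```python
import random


def build_labelled_dataset(safe_urls, malicious_urls, test_fraction=0.2, seed=0):
    """Merge two URL lists into shuffled (url, label) train and test splits.

    Label 0 means "safe", label 1 means "malicious / not safe".
    """
    labelled = {}
    for url in safe_urls:
        labelled[url.strip()] = 0
    # Malicious URLs are added last, so a URL present in both lists
    # ends up labelled as malicious (a cautious, assumed policy).
    for url in malicious_urls:
        labelled[url.strip()] = 1

    rows = list(labelled.items())          # duplicates are already removed
    random.Random(seed).shuffle(rows)      # fixed seed for reproducibility

    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]    # (train, test)
```

Holding out a test split at this stage makes it possible to measure later how well the model generalises to URLs it has not seen during training.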

Feature engineering

Once we have an appropriate training data set, the next step is feature engineering, i.e. defining the features that will be computed from our data items and then used as input to the machine learning model.

We will use features collected from a large number of research papers on this topic (for the full list, please refer to the Resources in the Appendix at the end of the post).

URL features considered were:

- does URL use IP (e.g. http://21.12.42.215/index.html)

- is URL using a shortening service, e.g. bit.ly/2aDMTE

- number of dots in URL

- number of sensitive words in URL (as defined by Garera et al., see references for more information)

- number of days since registration of domain

- the length of URL

- does the domain appear outside its expected location in the URL

- is the favicon loaded from a domain different from the one shown in the URL address bar

- presence of @ symbol

- existence of double slash redirection using //

- prefix or suffix in the URL; these are used to make the domain look more like a legitimate website

- use of a non-standard port

- is the URL using a subdomain, e.g. http://pay.domain.com

- are any objects (e.g. images, videos) loaded from a domain other than the one being visited

- is HTTP protocol being used

- links that are being used in tags

- number of redirects

- is there javascript that shows fake URL in status bar

- is URL using pop-up window

- presence of IFrame

- is HTTPS protocol being used

- OpenPageRank of the domain (see https://www.domcop.com/openpagerank/what-is-openpagerank), which measures the strength of the backlink profile. Legitimate domains tend to have a higher OpenPageRank.

- is URL indexed in Google search engine or not.
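Several of the features above can be computed from the URL string alone, without fetching the page. The sketch below shows one possible way to do this with the Python standard library. The function name `lexical_features`, the feature names, the short `SHORTENERS` list and the use of a hyphen in the host as a proxy for the prefix/suffix feature are assumptions made for illustration. Features such as domain age, OpenPageRank or Google indexing need external lookups and are not covered, and reliable subdomain detection would additionally need a public suffix list.

```python
import ipaddress
import re
from urllib.parse import urlsplit

# Illustrative, non-exhaustive list of shortening services (an assumption).
SHORTENERS = {"bit.ly", "goo.gl", "tinyurl.com", "t.co", "ow.ly"}
DEFAULT_PORTS = {"http": 80, "https": 443}
SCHEME_RE = re.compile(r"^[a-zA-Z][a-zA-Z0-9+.-]*://")


def _is_ip(host):
    """Return True if the host is a literal IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def lexical_features(url):
    """Compute a few URL-only features for a single URL string."""
    raw = url.strip()
    # Assume http:// when no scheme is given, so that urlsplit finds the host.
    candidate = raw if SCHEME_RE.match(raw) else "http://" + raw
    parts = urlsplit(candidate)
    host = (parts.hostname or "").lower()
    try:
        port = parts.port
    except ValueError:          # malformed or out-of-range port
        port = None
    after_scheme = candidate.split("://", 1)[1]

    return {
        "uses_ip": _is_ip(host),
        "uses_shortener": host in SHORTENERS,
        "num_dots": raw.count("."),
        "url_length": len(raw),
        "has_at_symbol": "@" in raw,
        # A second "//" after the scheme separator hints at a redirect.
        "has_double_slash_redirect": "//" in after_scheme,
        # Assumed proxy for the prefix/suffix feature.
        "has_hyphen_in_host": "-" in host,
        "non_standard_port": port is not None
        and port != DEFAULT_PORTS.get(parts.scheme),
        "uses_https": parts.scheme == "https",
    }
```

Calling `lexical_features("http://21.12.42.215/index.html")` returns a dictionary in which `uses_ip` is `True`. Such dictionaries can then be converted into numeric feature vectors for the models discussed next.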

Machine Learning Models for URL classification

The next step is to consider which machine learning models are appropriate for a URL classification model based on these features.

Given the form of features, one can consider the following ML models (though the list is by no means exhaustive):

- random forests

- decision trees

- logistic regression

- AdaBoost

- XGBoost (https://xgboost.readthedocs.io/en/stable/)

- neural nets

During training of ML models, it is also useful to apply explainability tools such as LIME, partial dependence plots and SHAP (https://github.com/slundberg/shap) to better understand which features are most important in driving the model's predictions. In our next post, we will do this in practice when we build a URL classification model from scratch.
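To make the modelling step more concrete, here is a minimal sketch of one of the simpler models from the list, logistic regression, trained with batch gradient descent using only the Python standard library. In practice one would more likely use an established library. The toy feature rows and labels below are made up for illustration and are not taken from any real data set, and the learning rate and number of epochs are arbitrary assumptions.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Probability that feature vector x belongs to class 1 (malicious)."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi   # gradient of the log loss
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Made-up toy rows: [uses_ip, has_at_symbol, uses_https]; label 1 = malicious.
X = [[1, 1, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 1], [0, 0, 0]]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic_regression(X, y)
```

With a linear model like this, the sign and size of each learned weight already give a rough indication of how a feature pushes the prediction, which is the kind of question LIME and SHAP answer for more complex models.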

Offline databases or real-time, live full URL-path categorization

In the example above, we considered a specific URL classification model that helps us answer whether a given URL is malicious or not. Such a service can work in real time: it fetches the URL on the fly as soon as you submit it and sends it to the ML model, which computes the features discussed above and, based on them, predicts whether we are dealing with a problematic URL.

The service can, however, also work from an offline data set of malicious URLs, where the submitted URL is simply checked against an existing offline URL database. The advantage is that this is much faster, because you do not have to wait for the URL to be fetched and processed. For this reason, many applications use an offline URL database.

The advantage of real-time URL classification, however, is that you can classify URLs that have not been seen before, for example URLs published only a short while ago that the service's classification bots have not yet checked. This approach is also somewhat safer: a URL that was safe a week ago could have been hijacked in the meantime and no longer be safe.
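The two approaches can also be combined. The sketch below shows one possible design, assuming an in-memory dictionary as the offline database and a caller-supplied function for the real-time path. The class name `UrlSafetyChecker`, the `classify_live` callable and the one-week maximum age are hypothetical choices for illustration.

```python
import time


class UrlSafetyChecker:
    """Check an offline database first, then fall back to live classification."""

    def __init__(self, classify_live, max_age_seconds=7 * 24 * 3600, clock=time.time):
        # classify_live(url) -> label stands in for the real-time
        # fetch, feature computation and model prediction step.
        self._classify_live = classify_live
        self._max_age = max_age_seconds
        self._clock = clock
        self._db = {}  # url -> (label, time of last check)

    def preload(self, url, label):
        """Add an entry from an existing offline data set."""
        self._db[url] = (label, self._clock())

    def check(self, url):
        """Return (label, source), where source is "offline" or "live"."""
        now = self._clock()
        cached = self._db.get(url)
        if cached is not None and now - cached[1] <= self._max_age:
            return cached[0], "offline"   # fast path: no fetching needed
        # Unknown or stale entry: classify in real time and store the result.
        label = self._classify_live(url)
        self._db[url] = (label, now)
        return label, "live"
```

Re-checking entries older than `max_age_seconds` addresses the hijacking scenario described above, at the cost of an occasional slow real-time lookup.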

Other use cases of URL categorization

URL categorization is not only used for analysing the safety of websites. Another very common use is checking the category of a URL based on its content.

A company may, for example, not want its employees to use shopping or gaming websites, so its filtering system needs to know the content category of each domain that may be visited. The most common option is to use an offline content categorization database of domains (there are more than 350 million domains on the web), against which the domain of the URL requested by the user is quickly checked for its category. The alternative is to categorize the URL in real time, as described above.

So if a user wants to visit Netflix, the content category returned from the database is TV, and TV is a blocked category, the filtering system blocks access.

Offline content categorization databases can be used for other purposes as well. For example, a platform with millions of domains may want to provide its users with information about the content category of each domain.
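A filtering check of this kind can be sketched as a simple lookup. In the example below, the contents of `CATEGORY_DB`, the set of `BLOCKED_CATEGORIES` and the policy of allowing uncategorized domains are all hypothetical. A real deployment would load the categories from an offline database and apply its own policy.

```python
from urllib.parse import urlsplit

# Hypothetical offline category database and company policy.
CATEGORY_DB = {
    "netflix.com": "TV",
    "example-shop.com": "Shopping",
    "wikipedia.org": "Reference",
}
BLOCKED_CATEGORIES = {"TV", "Shopping", "Gaming"}


def lookup_category(url, db=CATEGORY_DB):
    """Return the content category for the URL's domain, or None if unknown."""
    candidate = url if "://" in url else "http://" + url
    host = (urlsplit(candidate).hostname or "").lower()
    labels = host.split(".")
    # Try the full host first, then its parent domains,
    # e.g. www.netflix.com -> netflix.com -> com.
    for i in range(len(labels)):
        name = ".".join(labels[i:])
        if name in db:
            return db[name]
    return None


def is_blocked(url, db=CATEGORY_DB, blocked=BLOCKED_CATEGORIES):
    """Block the request if the domain's category is on the blocked list."""
    # Unknown domains are allowed here; this is an assumed policy.
    return lookup_category(url, db) in blocked
```

For example, `is_blocked("https://www.netflix.com/browse")` evaluates to `True` with the sample data above, while a URL on `wikipedia.org` is allowed.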

Appendix

Resources:

https://arxiv.org/pdf/2009.11116.pdf

https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.142.4092&rep=rep1&type=pdf

https://www.ml.cmu.edu/research/dap-papers/dap-guang-xiang.pdf